Innovative analysis methods applied to data captured by off-the-shelf peripherals can provide useful results in activity recognition without requiring large computational resources. We propose a framework for automated posture and gesture detection that exploits depth data from Microsoft Kinect. Its novel features are:

- the adoption of Semantic Web technologies for posture and gesture annotation;
- the exploitation of non-standard inference services provided by an embedded matchmaker [1] to automatically detect postures and gestures.
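To give an intuition of two non-standard inferences a semantic matchmaker can offer, concept contraction and concept abduction (the subject of [2]), the Python sketch below implements them on a deliberately simplified representation. It assumes that every concept is a plain conjunction of atomic names (a `frozenset`) and that the only ontology axioms are pairwise disjointness declarations; the real matchmaker reasons over Description Logic expressions and is not reproduced here. Contraction returns the conjuncts G that must be given up because they clash with a template, abduction returns the conjuncts H a template would additionally need to entail what is left, and the hypothetical penalty |G| + |H| orders templates from best to worst match. Treating the observed annotation as the request and each template as the resource, the unit weights, and all concept names are assumptions made for illustration.

```python
from typing import Dict, FrozenSet, List, Set, Tuple

# Toy assumption: a concept is a conjunction of atomic names, stored as a frozenset.
Concept = FrozenSet[str]
# Toy assumption: the only ontology axioms are pairwise disjointness declarations.
Disjointness = Set[FrozenSet[str]]


def contraction(request: Concept, resource: Concept,
                disjoint: Disjointness) -> Tuple[Concept, Concept]:
    """Split the request into (G, K): G clashes with the resource, K is compatible."""
    give_up = frozenset(
        a for a in request
        if any(frozenset((a, b)) in disjoint for b in resource)
    )
    return give_up, request - give_up


def abduction(request: Concept, resource: Concept) -> Concept:
    """Return H: conjuncts the resource would need so that it entails the request."""
    return request - resource


def penalty(request: Concept, resource: Concept, disjoint: Disjointness) -> int:
    """Hypothetical unit-weight score |G| + |H|, where 0 means a full match."""
    give_up, keep = contraction(request, resource, disjoint)
    return len(give_up) + len(abduction(keep, resource))


def rank(observed: Concept, templates: Dict[str, Concept],
         disjoint: Disjointness) -> List[Tuple[str, int]]:
    """Order template names by increasing penalty (ties broken by name)."""
    scored = [(name, penalty(observed, t, disjoint)) for name, t in templates.items()]
    return sorted(scored, key=lambda pair: (pair[1], pair[0]))


if __name__ == "__main__":
    # Hypothetical concept and template names, for illustration only.
    disjoint = {frozenset({"ArmRaised", "ArmLowered"})}
    templates = {
        "TPose": frozenset({"ArmRaised", "LegsStraight"}),
        "Rest": frozenset({"ArmLowered", "LegsStraight"}),
    }
    print(rank(frozenset({"ArmRaised", "LegsStraight"}), templates, disjoint))
    # [('TPose', 0), ('Rest', 1)]
```

Here the observation fully matches the hypothetical `TPose` template, while `Rest` is penalized because `ArmRaised` must be contracted away.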

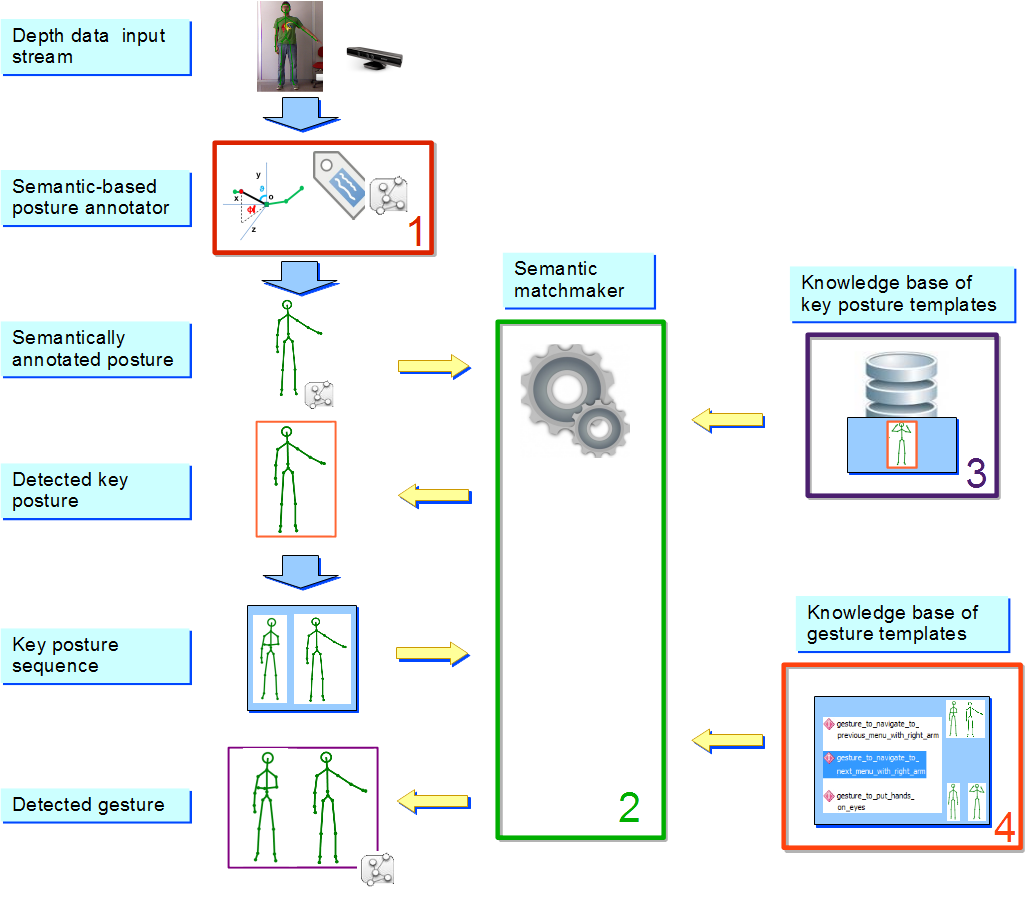

In particular, the recognition problem is treated as a resource discovery problem, grounded on semantic-based matchmaking [2]. An ontology for the geometry-based semantic description of postures has been developed and encapsulated in a Knowledge Base (KB), which also includes several instances representing the pose templates to be detected. 3D body model data detected by Kinect are pre-processed on the fly to identify key postures, i.e., unambiguous and non-transient body positions, which typically correspond to the initial or final state of a gesture. Each key posture is then annotated using standard Semantic Web languages grounded on Description Logics (DL). Non-standard inferences then compare the retrieved annotations with the templates populating the KB, and a similarity-based ranking supports the discovery of the best-matching posture. The ontology also allows annotating a gesture in terms of its component key postures, so that gestures can be recognized in a similar way.
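The key-posture step can be pictured as a stability filter. The Python sketch below is an illustration under stated assumptions, not the framework's actual pre-processing: joints are hypothetical dictionary keys mapped to (x, y, z) coordinates in a y-up camera space, the hypothetical `describe` function applies a single made-up geometric rule (wrist above shoulder means the arm is raised), and a descriptor set counts as a key posture once it stays unchanged for `min_frames` consecutive frames, which discards transient positions. The default of 15 frames (about half a second at 30 frames per second) is likewise an assumption. The resulting atom sets are what would then be annotated and matched against the KB templates.

```python
from typing import Dict, FrozenSet, Iterable, List, Optional, Tuple

Joint = Tuple[float, float, float]  # (x, y, z); y-up camera space is assumed
Skeleton = Dict[str, Joint]         # hypothetical joint-name keys


def describe(skel: Skeleton) -> FrozenSet[str]:
    """Map one skeleton frame to geometry-based atomic descriptors (hypothetical rule)."""
    atoms = set()
    for side in ("Left", "Right"):
        wrist, shoulder = skel[f"Wrist{side}"], skel[f"Shoulder{side}"]
        # Compare heights: a wrist above its shoulder is read as a raised arm.
        atoms.add(f"{side}ArmRaised" if wrist[1] > shoulder[1] else f"{side}ArmLowered")
    return frozenset(atoms)


def key_postures(frames: Iterable[Skeleton], min_frames: int = 15) -> List[FrozenSet[str]]:
    """Emit a descriptor set once it has stayed unchanged for min_frames consecutive frames."""
    result: List[FrozenSet[str]] = []
    current: Optional[FrozenSet[str]] = None
    run = 0
    for skel in frames:
        d = describe(skel)
        if d == current:
            run += 1
        else:
            current, run = d, 1  # posture changed: restart the stability counter
        if run == min_frames:   # stable long enough, so not transient; emitted once per run
            result.append(d)
    return result
```

With this design a brief flicker shorter than `min_frames` never produces a key posture, while each sufficiently long stable run yields exactly one.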

The framework has been implemented in a prototype and tested experimentally on a reference dataset. Results indicate good posture and gesture identification performance compared with machine-learning-based approaches.

-

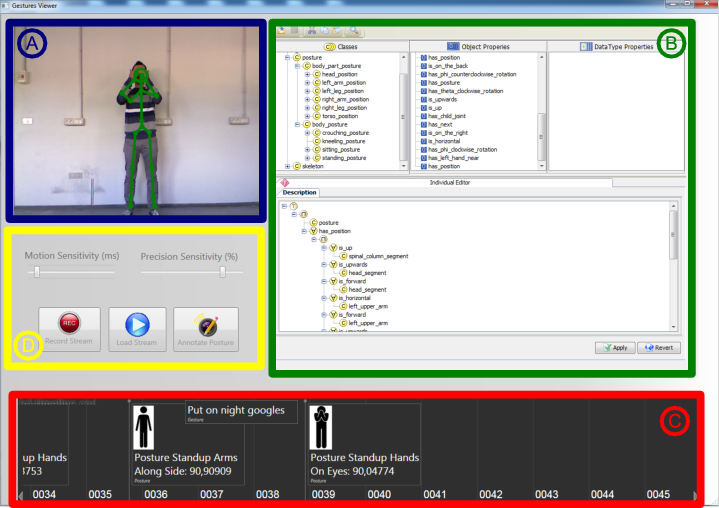

Real-time Kinect camera output with detected skeleton superimposed.

-

Sematic annotation panel, with tree-like graphical representation of the reference ontology and annotation editing via drag-and-drop of classes and properties.

-

Timeline with the sequence of recognized postures and gestures. They are processed by the embedded reasoner.

-

Toolbar and settings.
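Since a gesture is annotated through its component key postures, a timeline of recognized key postures can be scanned for gesture templates. The Python sketch below replaces the semantic matchmaking step with plain exact sequence matching for brevity, so it only approximates what the embedded reasoner does; the function name and all gesture and posture names are hypothetical. Each gesture template is an ordered tuple of key-posture names, and an event (timeline index, gesture name) is reported whenever the most recent key postures complete a template.

```python
from typing import Dict, List, Sequence, Tuple


def recognize_gestures(
    timeline: Sequence[str], gestures: Dict[str, Sequence[str]]
) -> List[Tuple[int, str]]:
    """Return (index, gesture) pairs, index being where the gesture's last key posture occurs."""
    events: List[Tuple[int, str]] = []
    # Walk the timeline as if key postures arrived one at a time (streaming order).
    for end in range(1, len(timeline) + 1):
        for name, steps in gestures.items():
            n = len(steps)
            # A gesture completes when the last n key postures equal its template.
            if n and end >= n and tuple(timeline[end - n:end]) == tuple(steps):
                events.append((end - 1, name))
    return events


if __name__ == "__main__":
    timeline = ["Rest", "TPose", "Rest", "ArmsUp"]
    gestures = {"Flap": ("Rest", "TPose", "Rest"), "RaiseArms": ("Rest", "ArmsUp")}
    print(recognize_gestures(timeline, gestures))
    # [(2, 'Flap'), (3, 'RaiseArms')]
```

Exact matching makes the sketch brittle to a single misrecognized posture; the similarity-based ranking described above is what tolerates such partial matches in the actual framework.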

Publications

Scientific publications about Kinect posture and gesture recognition

- M. Ruta, F. Scioscia, M. di Summa, S. Ieva, E. Di Sciascio, M. Sacco. Semantic matchmaking for Kinect-based posture and gesture recognition. International Journal of Semantic Computing, Volume 8, Number 4, pages 491-514, 2014.
- M. Ruta, F. Scioscia, M. di Summa, S. Ieva, E. Di Sciascio, M. Sacco. Body posture recognition as a discovery problem: a semantic-based framework. The 2014 International Conference on Active Media Technology (AMT'14), Volume 8610, pages 160-173, August 2014.
- M. Ruta, F. Scioscia, M. di Summa, S. Ieva, E. Di Sciascio, M. Sacco. Semantic matchmaking for Kinect-based posture and gesture recognition. Eighth IEEE International Conference on Semantic Computing (ICSC 2014), pages 15-22, June 2014.

References

- F. Scioscia, M. Ruta, G. Loseto, F. Gramegna, S. Ieva, A. Pinto, E. Di Sciascio. A mobile matchmaker for the Ubiquitous Semantic Web. International Journal on Semantic Web and Information Systems, Volume 10, Number 4, pages 77-100, 2014.
- M. Ruta, E. Di Sciascio, F. Scioscia. Concept Abduction and Contraction in Semantic-based P2P Environments. Web Intelligence and Agent Systems, Volume 9, Number 3, pages 179-207, 2011.